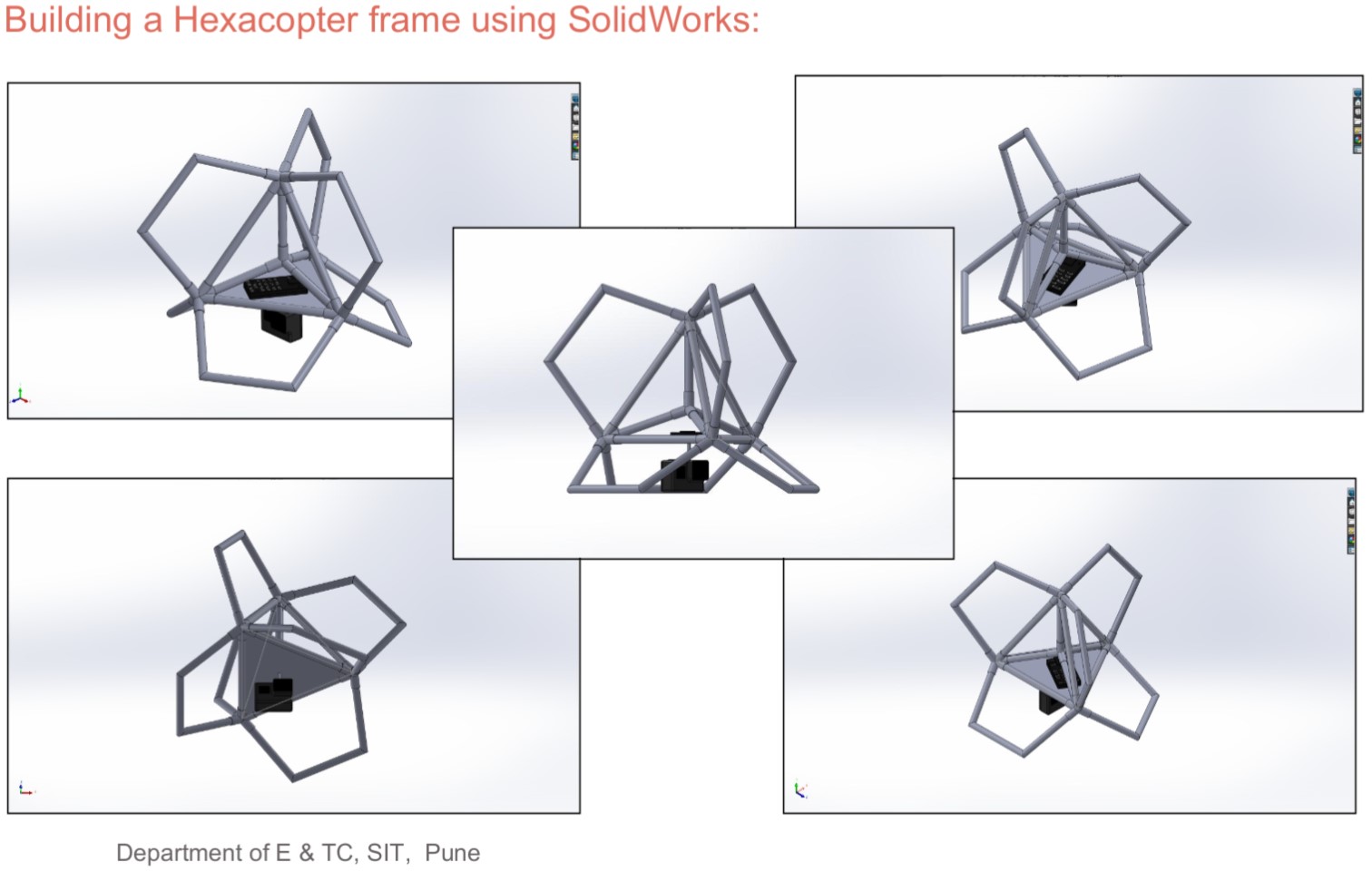

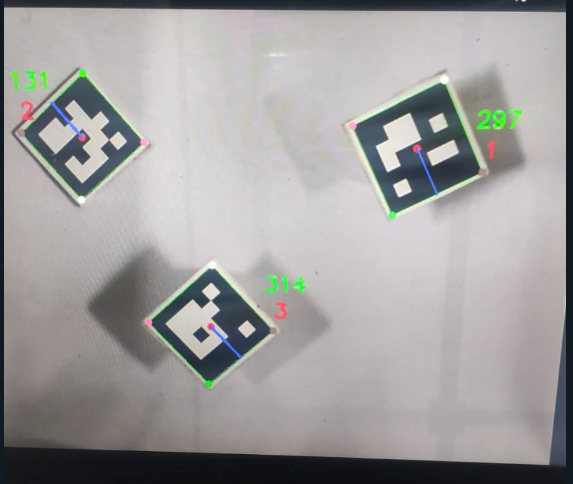

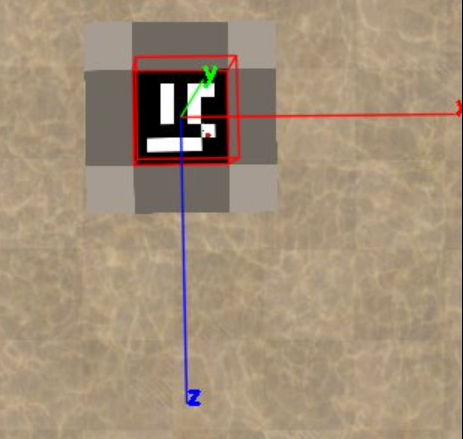

Optimizing Flight Control and Minimizing Latency Perception in Dynamic Environments: A Reinforcement Learning Approach to Trajectory Planning and Obstacle Avoidance for Omnidirectional Hexacopters Using ArUco Markers


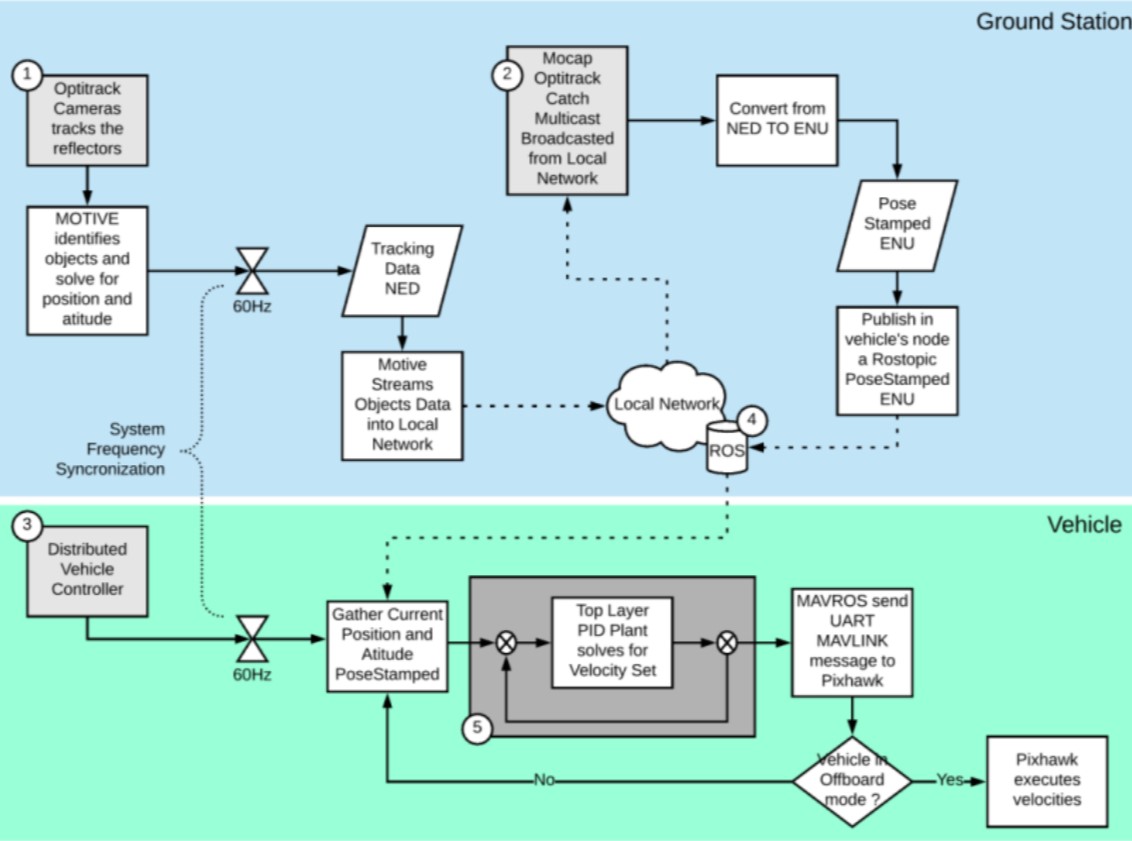

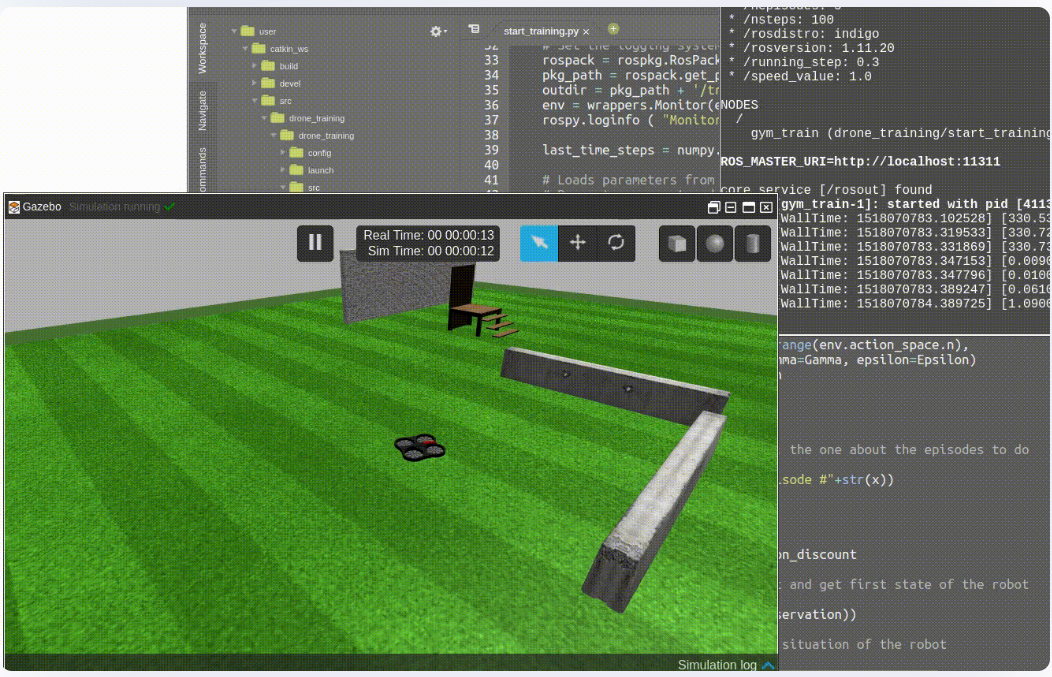

Autonomous UAV process using ROS: Implementing the simulation environment and exposing autonomous UAV parameters such as body frames, position, velocity, and angular rotation through ROS nodes and ROS services.

ROS and Reinforcement Learning: Creating a ROS environment for simulating time-optimal flight and planning trajectories through checkpoints. Developing model predictive control algorithms for learning policies through improved rewards. Building the model from state, action, and reward definitions that consider position, velocity, body rates, rotor rotational speeds, and unit-quaternion rotation, and comparing the results with state-of-the-art reinforcement learning (RL) algorithms. Deploying the algorithm on a real aerial vehicle and fine-tuning the motor equations with additional computational behavior.
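A minimal sketch of how the state and reward described above could be laid out, assuming a progress-based reward toward the next checkpoint. The names `DroneState` and `checkpoint_reward`, and the parameters `pass_radius`, `pass_bonus`, and `rate_penalty`, are hypothetical and chosen for illustration; the project's actual reward design is not stated here.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class DroneState:
    """Illustrative state vector; field names are assumptions."""
    position: Vec3                                  # world frame, metres
    velocity: Vec3                                  # world frame, m/s
    quaternion: Tuple[float, float, float, float]   # unit quaternion (w, x, y, z)
    body_rates: Vec3                                # body frame, rad/s
    rotor_speeds: Tuple[float, ...]                 # one entry per rotor (six for a hexacopter)


def checkpoint_reward(
    prev: DroneState,
    curr: DroneState,
    checkpoint: Vec3,
    pass_radius: float = 0.5,    # assumed distance at which a checkpoint counts as passed
    pass_bonus: float = 10.0,    # assumed one-off bonus for passing it
    rate_penalty: float = 0.01,  # assumed weight on body-rate magnitude
) -> Tuple[float, bool]:
    """Return (reward, passed) for one transition toward `checkpoint`."""
    # Progress: how much closer the vehicle got to the checkpoint this step.
    progress = math.dist(prev.position, checkpoint) - math.dist(curr.position, checkpoint)
    # Penalise aggressive body rates to discourage unstable manoeuvres.
    penalty = rate_penalty * math.sqrt(sum(w * w for w in curr.body_rates))
    reward = progress - penalty
    passed = math.dist(curr.position, checkpoint) <= pass_radius
    if passed:
        reward += pass_bonus
    return reward, passed


def hover_state(position: Vec3) -> DroneState:
    """Helper for the example: a level, stationary hexacopter at `position`."""
    return DroneState(position, (0.0, 0.0, 0.0), (1.0, 0.0, 0.0, 0.0),
                      (0.0, 0.0, 0.0), (0.0,) * 6)


if __name__ == "__main__":
    gate = (3.0, 0.0, 0.0)
    reward, passed = checkpoint_reward(hover_state((0.0, 0.0, 0.0)),
                                       hover_state((1.0, 0.0, 0.0)), gate)
    print(reward, passed)
```

Rewarding per-step progress rather than only checkpoint arrival gives the policy a dense learning signal, which is one common choice for time-optimal flight; other formulations, such as penalising elapsed time directly, fit the same state layout.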
